Ship AI workflows and agents to production faster

Iterate rapidly, orchestrate reliably, and scale with complete control. With a developer-first approach, Inngest handles the infrastructure, so you can focus on building AI applications — not backend complexity.

Built to support any AI use case

Meet the demands of complex AI workflows

Inngest orchestrates AI agents so your applications run reliably and efficiently in production. From complex agentic workflows to long-running processes, it gives you the tooling to concentrate on the AI application itself instead of the orchestration behind it.

Reliable orchestration

Handle workflows across multiple models, external tools, and data sources with full observability and retries to gracefully manage failures.

Efficient resource management

Use step.ai.infer to proxy long-running LLM requests, reducing serverless compute costs while gaining enhanced telemetry.

Rapid iteration

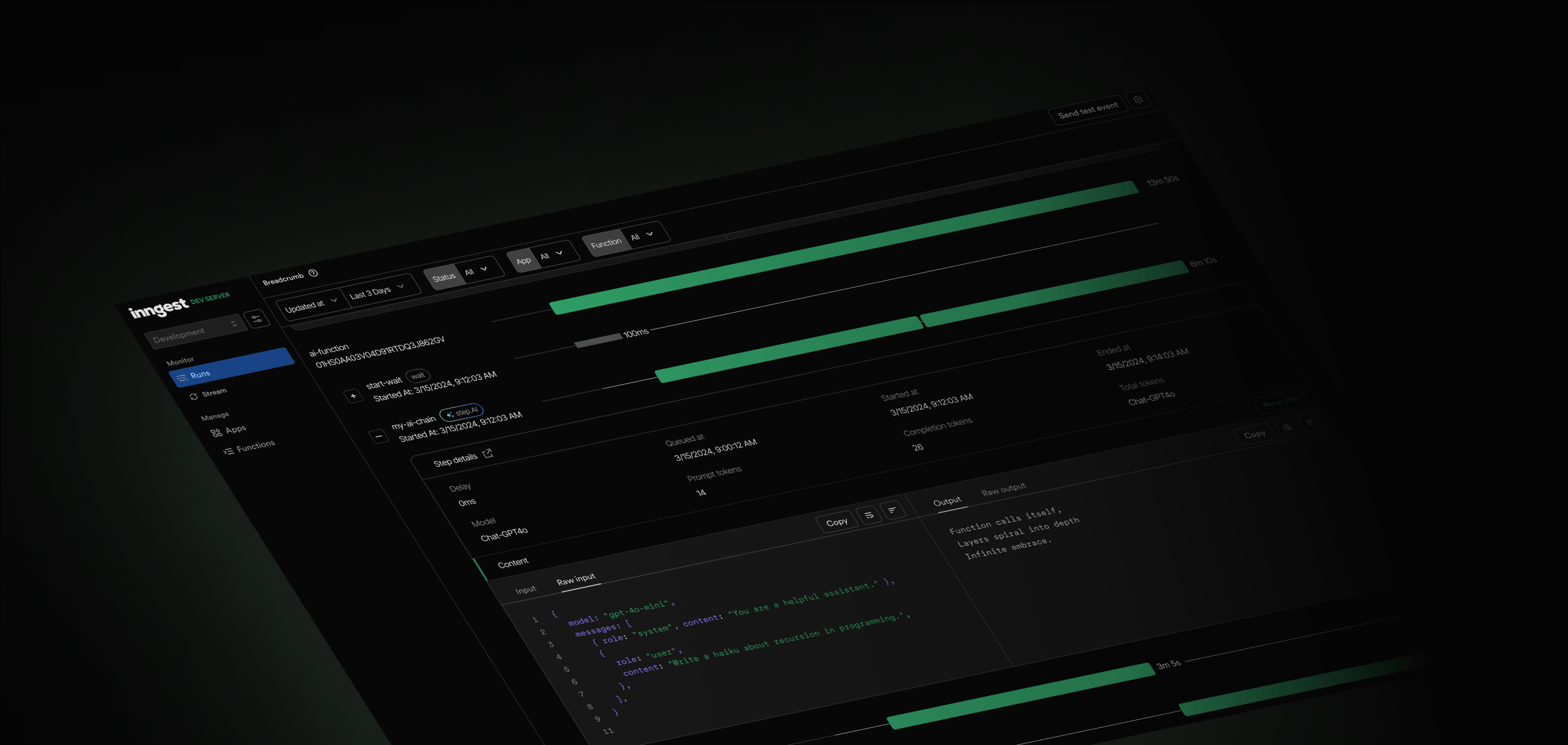

Debug agentic workflows locally with Inngest's Dev Server for faster development and testing.

Scalable for production

Deliver AI applications with the reliability and observability needed to understand and optimize customer workloads in production.

Focus on AI engineering

Use Inngest's SDKs, including AgentKit, to define workflows in code and leave orchestration complexities to us.

AgentKit: The fastest way to build production-ready AI workflows

AgentKit is a framework for building and orchestrating AI agents, from single-model inference to multi-agent systems, enabling reliable AI at scale.

Simplified orchestration

Define complex AI workflows in code, including agentic orchestration, and let AgentKit manage dependencies, retries, and failures.

    import { Agent, Network, openai } from "@inngest/agent-kit";

    // `step` is the step object passed to the surrounding Inngest function handler.

    // Define simple agents
    const writer = new Agent({
      name: "writer",
      system:
        "You are an expert writer. " +
        "You write readable, concise, simple content.",
      model: openai({ model: "gpt-4o", step }),
    });

    // Compose into networks of agents that can work together
    const network = new Network({
      agents: [writer],
      defaultModel: openai({ model: "gpt-4o", step }),
    });

Locally debug for faster iteration

Debug and test your workflows locally with Inngest's Dev Server, providing the tools to iterate quickly and refine agentic workflows before shipping to production.

Production-grade reliability

AgentKit workflows are production-ready, with reliable orchestration, full observability, and the ability to integrate external tools and models.

Introducing step.ai APIs

Seamlessly integrate reliable, retryable steps and achieve full observability across your AI applications and agentic workflows. These APIs are designed to simplify development and production, empowering you to iterate rapidly and ship production-ready AI products with confidence.

Make existing code more reliable with step.ai.wrap

Wrap any AI SDK with step.ai.wrap to ensure reliable execution of AI tasks. Gain complete visibility into request and response data, with built-in retries to handle failures gracefully and keep workflows running smoothly.
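To make the idea concrete, here is a minimal conceptual sketch of what wrapping a call with retries and request/response capture can look like. It is not Inngest's implementation: `wrapWithRetries`, `CallRecord`, and the default of three attempts are hypothetical names and values chosen for illustration, and the sketch retries immediately, whereas a production system would normally wait between attempts.

```typescript
// Conceptual sketch only; NOT how step.ai.wrap is implemented.
// `CallRecord` and `wrapWithRetries` are hypothetical names.

interface CallRecord {
  id: string;        // label for the wrapped call
  attempt: number;   // 1-based attempt counter
  args: unknown[];   // the request: arguments passed to the wrapped function
  result?: unknown;  // the response, present when the attempt succeeded
  error?: string;    // the failure message, present when the attempt threw
}

function wrapWithRetries<A extends unknown[], R>(
  id: string,
  fn: (...args: A) => Promise<R>,
  log: CallRecord[],
  maxAttempts = 3, // assumed default, for illustration
): (...args: A) => Promise<R> {
  return async (...args: A): Promise<R> => {
    let lastError: unknown;
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      try {
        const result = await fn(...args);
        // Record both the request and the response for later inspection.
        log.push({ id, attempt, args, result });
        return result;
      } catch (err) {
        lastError = err;
        // Record the failed attempt, then fall through to try again.
        log.push({ id, attempt, args, error: String(err) });
      }
    }
    // Every attempt failed: surface the last error to the caller.
    throw lastError;
  };
}
```

The wrapped function keeps the signature of the original, so existing call sites do not change; the `log` array stands in for whatever observability backend would store the captured data.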

Secure inference with step.ai.infer

Offload inference requests securely to any inference API using step.ai.infer, powered by Inngest's infrastructure. This reduces serverless compute costs while providing enhanced observability into every inference request and response.
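As a rough illustration of the call shape, the sketch below sends a chat request through step.ai.infer from a small helper. The `InferStep` interface is a hand-written stand-in for the relevant part of the `step` object, declared only so the example type-checks on its own; it is an assumption, not the SDK's real type. The helper name `summarizeTicket`, the step ID, and the system prompt are hypothetical, and the response is assumed to follow the OpenAI chat-completion shape.

```typescript
// Sketch only. `InferStep` is a hypothetical, hand-written subset of the
// `step` object; the SDK's actual types are richer than this.

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Assumed OpenAI-style chat-completion response (only the fields used here).
interface ChatResponse {
  choices: { message: { content: string | null } }[];
}

interface InferStep {
  ai: {
    models: { openai(opts: { model: string }): unknown };
    infer(
      id: string,
      opts: { model: unknown; body: { messages: ChatMessage[] } },
    ): Promise<ChatResponse>;
  };
}

// Hypothetical helper: asks the model for a one-sentence ticket summary.
async function summarizeTicket(
  step: InferStep,
  ticketBody: string,
): Promise<string> {
  const response = await step.ai.infer("summarize-ticket", {
    model: step.ai.models.openai({ model: "gpt-4o" }),
    body: {
      messages: [
        { role: "system", content: "Summarize the support ticket in one sentence." },
        { role: "user", content: ticketBody },
      ],
    },
  });
  // Fall back to an empty string if the model returned no text.
  return response.choices[0]?.message.content ?? "";
}
```

Because the helper only depends on the shape of `step`, any object with that shape can stand in for it in a unit test.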

    import { generateText } from "ai";
    import { openai } from "@ai-sdk/openai";
    import { inngest } from "./client"; // hypothetical path to your Inngest client

    export default inngest.createFunction(
      { id: "summarize-contents" },
      { event: "app/ticket.created" },
      async ({ event, step }) => {
        // This calls generateText with the given arguments, adding AI observability,
        // metrics, datasets, and monitoring to your calls.
        const { text } = await step.ai.wrap("using-vercel-ai", generateText, {
          model: openai("gpt-4-turbo"),
          prompt: "What is love?",
        });

        // Return the generated text as the function's result.
        return { text };
      }
    );

Case Study

Aomni: Productionizing AI-driven sales flows using serverless LLMs

Learn how Aomni built and scaled their AI research analyst to deliver deal-critical insights, analysis and automations to strategic sellers.

Aomni uses Inngest to orchestrate chained LLM calls, including tree-of-thought, chain-of-thought, and retrieval-augmented generation (RAG).

“For anyone who is building multi-step AI agents (such as AutoGPT type systems), I highly recommend building it on top of Inngest's job queue orchestration framework, the traceability it provides out of the box is super useful, plus you get timeouts & retries for free.”

Learn more about Inngest

Explore how Inngest's orchestration and tooling can help you bring your AI use case to production.

AgentKit

Learn how to use AgentKit to build, test and deploy reliable AI workflows.

View documentation

The principles of production AI

How LLM evaluations, guardrails, and orchestration shape safe and reliable AI experiences.

Read article

Agentic workflow example: importing CRM contacts with Next.js and OpenAI o1

A reimagined contacts importer that uses reasoning models with Inngest.

Read article

AI early access program

Get early access to our latest AI features, including step.ai, AgentKit and more, and stay up to date as they ship.